Chapter 7: Image Processing & Analysis

Images are a major data format in chemistry and other sciences. They can be electron microscope images of a surface, photos of a reaction, or images from fluorescence microscopy. Image processing and analysis can be performed using software like Photoshop or GIMP, but this can be tedious and subjective when done manually. A better alternative is to have software automate the entire process so that images are processed and their features are measured consistently, precisely, and objectively.

Among the more popular Python libraries for performing scientific image analysis is scikit-image. This library is specifically designed for scientific image analysis and includes a wide variety of tools for processing images and extracting information from them. Examples of tools in scikit-image include functions for boundary detection, object counting, entropy quantification, color space conversion, image comparison, and many others. Even though there are other Python libraries for working with images, such as pillow, scikit-image is designed for scientific image analysis while pillow is intended for more fundamental operations such as image rotation and cropping.

Like SciPy, scikit-image stores most of its functions in modules, so it is common to import modules individually. For example, if the user wants to import the color module, it is imported using the following code.

from skimage import color

Multiple modules can also be imported in a single import statement, as shown below. A list of modules and their descriptions is shown in Table 1, and additional information can be found on the project website at http://scikit-image.org/.

from skimage import color, data, io

We can also import a single function from a module using the following code structure.

from skimage.module import function

Table 1 Scikit-Image Modules

| Module | Description |
|---|---|
| | Converts images between color spaces |
| | Provides sample images |
| | Generates coordinates of geometric shapes |
| | Examines and modifies image exposure levels |
| | Handles reading, writing, and visualizing TIFF files |
| | Feature detection and calculation |
| | Contains various image filters and functions for calculating threshold values |
| | Returns localized measurements in the image |
| | Finds optimized paths across the image |
| | Supports reading and writing images |
| | Performs a variety of measurements and calculations on or between two images |
| | Generates objects of a specified morphology |
| | Provides simple image functions for beginners |
| | Includes image restoration tools |
| | Identifies boundaries in an image |
| | Performs image transformations including scaling and image rotation |
| | Converts images into different encodings (e.g., floats to integers) and other modifications such as inverting the image values and adding random noise to an image |
| | Image viewer tools |

This chapter assumes the following imports. Because we will be doing some plotting, matplotlib is imported and inline plotting is assumed to be enabled. In addition, some scikit-image functions are not contained in a module, so we also need to import skimage itself.

import matplotlib.pyplot as plt

import skimage

from skimage import data, io, color

Despite the power and utility of the scikit-image library, there is a significant amount of image processing and analysis that can be performed using NumPy functionality. This is especially true since scikit-image imports/stores images as NumPy arrays.

7.1 Basic Image Structure

Most images are raster images, which are essentially a grid of pixels where each location on the grid is a number describing that pixel. If the image is a grayscale image, these values represent how light or dark each pixel is; and if it is a color image, the value(s) at each location describe the color. Figure 1 shows a grayscale photo of a flask containing crystals, with a 10 \(\times\) 10 pixel excerpt showing the brightness values from the photo. While there is another major class of images known as vector images, we will restrict ourselves to dealing with raster images in this chapter as primary scientific data tend to be raster images.

Figure 1 An excerpt of values from a grayscale image showing values representing the brightness of each pixel.

7.1.1 Loading Images

The scikit-image library includes a data module containing a series of images for the user to experiment with. To display images in the notebook, use the matplotlib plt.imshow() function. Each image in the data module has a function for fetching the image, and you can find a complete list of images/functions in the data module by typing help(data). We will open and view the image of a grayscale lunar surface using the data.moon() function.

Warning

The scikit-image io.imshow() function is being deprecated and will be removed in version 0.27. If you have used this function in the past, consider using matplotlib’s plt.imshow() instead.

moon = data.moon()

plt.imshow(moon);

The image does not look like a grayscale image because matplotlib is treating the image as data (see section 7.1.4). Use cmap='gray', vmin=0, vmax=255 to make the image look like a grayscale image.

Tip

If the image turns out black, you probably need to change the upper limit to vmax=1. See section 7.2.2 for a discussion on encoding.

moon = data.moon()

plt.imshow(moon, cmap='gray', vmin=0, vmax=255);

If we take a closer look at the data contained inside the lunar surface image, we find a two-dimensional NumPy array filled with integers ranging from 0 \(\rightarrow\) 255.

moon

array([[116, 116, 122, ..., 93, 96, 96],

[116, 116, 122, ..., 93, 96, 96],

[116, 116, 122, ..., 93, 96, 96],

...,

[109, 109, 112, ..., 117, 116, 116],

[114, 114, 113, ..., 118, 118, 118],

[114, 114, 113, ..., 118, 118, 118]], shape=(512, 512), dtype=uint8)

Each of these values represents a lightness value where 0 is black, 255 is white, and all other values are various shades of gray. To manipulate the image, we can use NumPy methods, since scikit-image stores images as ndarrays. For example, the image can be darkened by dividing all the values by two. Because this array is designated to contain integers (dtype = uint8), integer division (//) is used to avoid floats.

moon_dark = moon // 2

plt.imshow(moon_dark, cmap='gray', vmin=0, vmax=255);
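One caveat with arithmetic on uint8 arrays is that results outside 0 \(\rightarrow\) 255 wrap around instead of saturating. The following is a minimal sketch, using a small made-up array (`pixels` is a hypothetical stand-in for an image such as `moon`), of one way to brighten an image safely by computing in a wider data type and clipping before converting back.

```python
import numpy as np

# Hypothetical 2 x 3 grayscale "image" standing in for a real photo
pixels = np.array([[10, 100, 200],
                   [50, 150, 250]], dtype=np.uint8)

# Darkening: integer division keeps the uint8 dtype
darker = pixels // 2

# Naive brightening: values above 255 wrap around (e.g., 200 + 100 -> 44)
wrapped = pixels + np.uint8(100)

# Safer brightening: compute in a wider dtype, clip, then convert back
brighter = np.clip(pixels.astype(np.int16) + 100, 0, 255).astype(np.uint8)

print(darker)
print(wrapped)
print(brighter)
```

The wrapped result shows bright pixels turning dark, which is why clipping is usually the behavior you want when brightening.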

7.1.2 Color Images

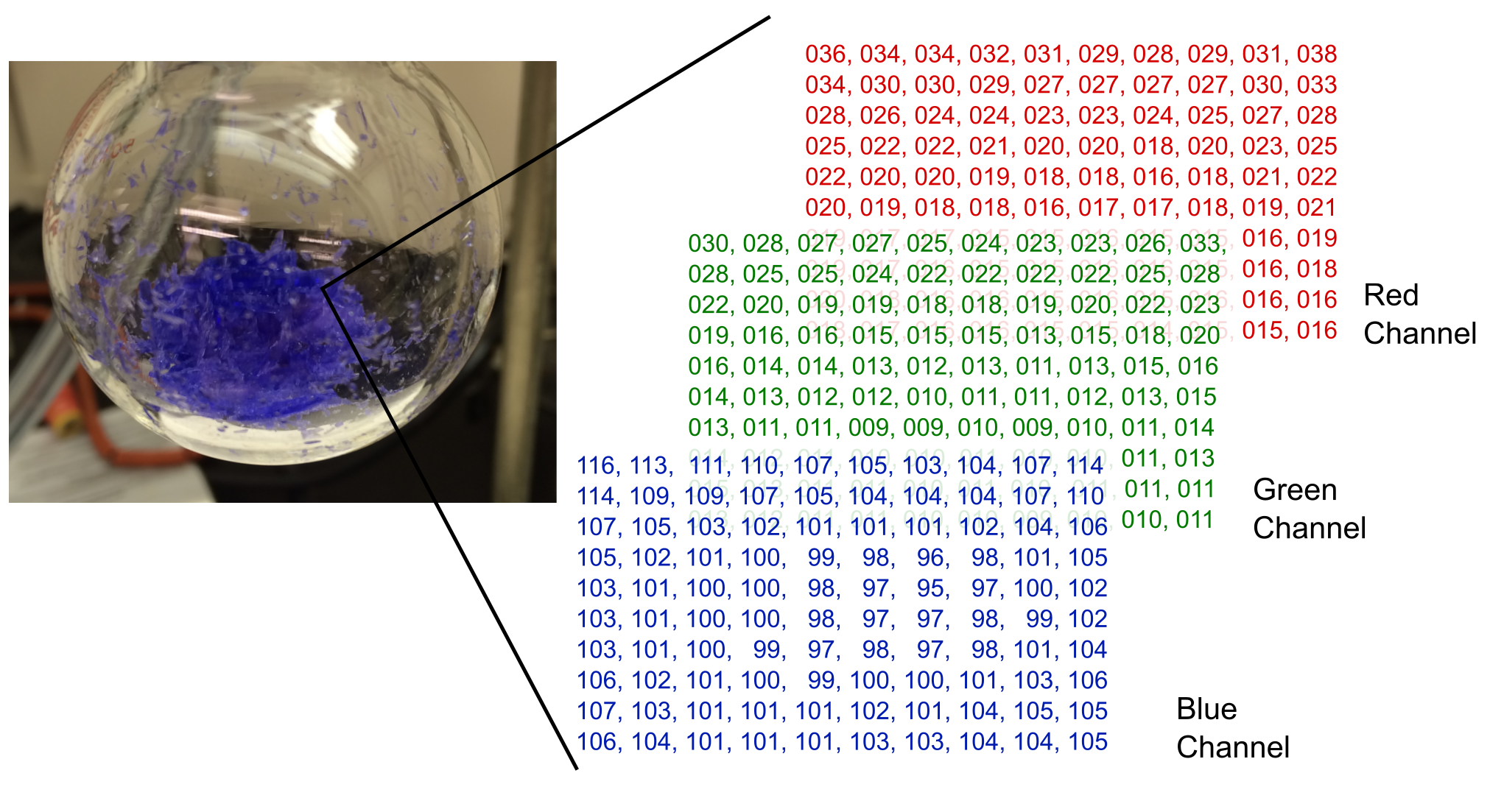

Color images are slightly more complicated to represent because all necessary colors cannot be represented by single integers from 0 \(\rightarrow\) 255. Probably the most popular way to digitally encode colors is RGB, which describes every color as a combination of red, green, and blue (Figure 2). These are also known as color channels, and this is typically how computer monitors display colors. If you look closely enough at the screen, which may require a magnifying glass for high-resolution displays, you can see that every pixel is really made up of three lights: a red, a green, and a blue. Their perceived color is a mixture or blend of the red, green, and blue values. Since every pixel now has three values to describe it, a NumPy array that defines a color image is three-dimensional. The first two dimensions are the height and width of the image, and the third dimension contains values from each of the three color channels.

[row, column, channel]

Figure 2 An excerpt of the red, green, and blue color channels for a small portion of a color image. The values in each channel represent the brightness of that color in each pixel.

By scikit-image convention, the colors are encoded in the order red, green, and then blue, so channel 0 is red, channel 1 is green, and channel 2 is blue.

We can look at an example of a color photo by loading an image from the Hubble Space Telescope. This image is included with the scikit-image library for users to experiment with.

hubble = data.hubble_deep_field()

plt.imshow(hubble);

hubble

array([[[15, 7, 4],

[15, 9, 9],

[ 9, 4, 8],

...,

[18, 11, 5],

[16, 19, 10],

[15, 10, 6]],

[[ 2, 7, 0],

[ 5, 11, 7],

[13, 19, 17],

...,

[11, 10, 5],

[13, 18, 11],

[ 9, 11, 6]],

[[10, 15, 9],

[13, 18, 14],

[18, 22, 23],

...,

[ 1, 2, 0],

[14, 15, 10],

[ 8, 14, 10]],

...,

[[19, 20, 14],

[15, 15, 13],

[13, 13, 13],

...,

[ 2, 6, 5],

[12, 14, 13],

[ 7, 9, 8]],

[[13, 10, 5],

[ 9, 11, 8],

[12, 18, 16],

...,

[ 5, 9, 8],

[ 6, 12, 10],

[ 7, 13, 9]],

[[21, 16, 12],

[10, 12, 9],

[ 9, 20, 16],

...,

[11, 15, 14],

[ 9, 18, 15],

[ 7, 18, 12]]], shape=(872, 1000, 3), dtype=uint8)

Looking at the array, you will notice that it is indeed three-dimensional with values residing in triplets. You may also notice that the numbers are rather small because most pixels in this particular image are near black. If we want to look at just the red values of the image, this can be accomplished by slicing the array. The red channel is the first layer in the third dimension, so we slice it as hubble[:, :, 0]. The brighter a group of pixels in the red channel image, the more red color is present in that region.

plt.imshow(hubble[:,:,0], cmap='gray', vmin=0, vmax=255);
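To make the [row, column, channel] layout concrete, here is a small sketch with a made-up 2 \(\times\) 2 color image (the array `rgb` is hypothetical, not one of the chapter's images). It shows that slicing a single channel returns a two-dimensional array and how the other channels might be zeroed out in a copy.

```python
import numpy as np

# Hypothetical 2 x 2 RGB image: red, green, blue, and white pixels
rgb = np.array([[[255, 0, 0], [0, 255, 0]],
                [[0, 0, 255], [255, 255, 255]]], dtype=np.uint8)

red = rgb[:, :, 0]     # 2D array of red intensities
green = rgb[:, :, 1]
blue = rgb[:, :, 2]

print(rgb.shape)       # (rows, columns, channels)
print(red)

# Keep only the red channel by zeroing green and blue in a copy
red_only = rgb.copy()
red_only[:, :, 1:] = 0
print(red_only[1, 1])
```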

7.1.3 External Images

Alternatively, images can be loaded from an external source using the io.imread() function provided by scikit-image. This function requires one argument to tell scikit-image which image the user wants to load. If your Jupyter notebook is in the same directory as the image you want to load, you can simply input the full file name, including the extension, as a string. Otherwise, you will need to include the full path to the file in addition to the name. For example, below is a photo of [Ni(CH\(_3\)CN)\(_6\)][BF\(_4\)]\(_2\) crystals, which is loaded into Python.

flask = io.imread('data/flask.png')

plt.imshow(flask);

If we look at the array for the flask image below, you will notice that this is a three-dimensional array with four color channels. This can happen in some file types such as Portable Network Graphics (PNG) where a fourth alpha channel is supported, making the encoding RGBA. This channel measures opacity, which is how non-transparent a pixel is. All of the pixels in this image are fully opaque, which is represented by 255. If the image were fully transparent, the alpha values would all be zero, and anything in between would be translucent. PNG images support an alpha channel, as do many image formats, but JPG/JPEG images do not support this feature.

flask

array([[[102, 86, 60, 255],

[107, 90, 63, 255],

[113, 95, 67, 255],

...,

[ 88, 72, 46, 255],

[ 90, 74, 48, 255],

[ 92, 76, 50, 255]],

[[103, 87, 61, 255],

[107, 90, 63, 255],

[112, 95, 67, 255],

...,

[ 88, 72, 46, 255],

[ 90, 74, 48, 255],

[ 93, 77, 51, 255]],

[[101, 85, 59, 255],

[107, 90, 62, 255],

[112, 95, 67, 255],

...,

[ 88, 72, 46, 255],

[ 91, 75, 49, 255],

[ 93, 77, 51, 255]],

...,

[[161, 156, 136, 255],

[161, 156, 136, 255],

[161, 156, 136, 255],

...,

[ 18, 15, 10, 255],

[ 19, 16, 9, 255],

[ 20, 17, 10, 255]],

[[160, 155, 135, 255],

[161, 156, 136, 255],

[161, 156, 136, 255],

...,

[ 18, 15, 10, 255],

[ 18, 15, 9, 255],

[ 20, 17, 10, 255]],

[[160, 155, 135, 255],

[160, 155, 135, 255],

[161, 156, 136, 255],

...,

[ 19, 16, 11, 255],

[ 18, 15, 9, 255],

[ 20, 17, 10, 255]]], shape=(600, 744, 4), dtype=uint8)
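Many functions expect a three-channel RGB image, so it can help to check the alpha channel and drop it when it carries no information. The sketch below uses a made-up RGBA array (`rgba` is hypothetical, standing in for an image like `flask`) and plain NumPy slicing.

```python
import numpy as np

# Hypothetical 2 x 2 RGBA image, fully opaque (alpha = 255)
rgba = np.zeros((2, 2, 4), dtype=np.uint8)
rgba[..., :3] = [102, 86, 60]   # same color for every pixel
rgba[..., 3] = 255

# Check whether the alpha channel carries any information
fully_opaque = bool(np.all(rgba[..., 3] == 255))

# Drop the alpha channel by keeping only the first three channels
rgb = rgba[..., :3]

print(fully_opaque, rgb.shape)
```

Slicing simply discards the opacity values. If an image does contain translucent pixels, the color module's rgba2rgb() function, which blends the image with a background color, may be a better choice.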

7.1.4 Colormaps

When matplotlib deals with a NumPy array, it treats it as generic data, not an image. The human mind does not effectively handle data on this scale, so to make it easier for humans to interpret, matplotlib maps the values to colors according to a colormap, which is shown by the colorbar to the right of the image. This is known as false color because the colors in the image are not the real image colors. By default, the colormap viridis is used, but there are many other colormaps available to choose from in matplotlib. Below, the red color channel from the Hubble image is displayed using the plt.imshow() function.

import matplotlib.pyplot as plt

plt.imshow(hubble[:,:,0])

plt.colorbar();

To change colormaps, input the name of a different colormap as a string in the optional cmap argument (e.g., plt.imshow(hubble[:,:,0], cmap='magma')). See https://matplotlib.org/examples/color/colormaps_reference.html for a list of available colormaps. It is strongly encouraged to use one of the perceptually uniform colormaps because they are more accurately interpreted by humans and also show up as a smooth, interpretable gradient when printed on a grayscale printer. Below is the display of the Hubble image red channel using the Reds colormap.

plt.imshow(hubble[:,:,0], cmap='Reds', vmin=0, vmax=255);

7.1.5 Saving Images

After processing an image, it is sometimes helpful to save the image to disk for records, reports, and presentations. The plt.savefig() function works just fine if executed in the same Jupyter cell as the plt.imshow() function. Alternatively, scikit-image provides an image saving function io.imsave(file_name, array) that operates similarly to plt.savefig() except with a couple of image-specific arguments. One key difference is that plt.savefig() does not take an array argument but instead assumes you want the most recently displayed figure saved, while io.imsave(file_name, array) takes an array and can save an image even if it has not been displayed in the Jupyter notebook. After running the code below, check the directory containing the Jupyter notebook, and there should be a new file titled new_img.png.

io.imsave('new_img.png', hubble)

7.2 Basic Image Manipulation

The scikit-image library, along with NumPy, also provides a variety of basic image manipulation functions for tasks such as adjusting the color, managing how the data is numerically represented, and establishing threshold cutoff values.

7.2.1 Colors

There are numerous ways to represent colors in digital data. The RGB color space is undoubtedly one of the most popular color spaces, but there are others that you may encounter, such as HSV (hue, saturation, value) or XYZ. Scikit-image provides functions in the color module for easily converting between these color spaces, and Table 2 lists some common functions. See the scikit-image website for a more complete list.

Table 2 Common Functions from the color Module

| Function | Description |
|---|---|
| | Converts from RGB to grayscale |
| | Converts grayscale to RGB by just replicating the gray values into three color channels |
| | HSV to RGB conversion |
| | XYZ to RGB conversion |

Below, a color image is converted into a grayscale image.

hubble_gray = color.rgb2gray(hubble)

hubble_gray

array([[0.03326941, 0.04029412, 0.02098392, ..., 0.04727412, 0.0694651 ,

0.04225137],

[0.0213051 , 0.03700627, 0.06894431, ..., 0.03863529, 0.06444235,

0.04005686],

[0.05296039, 0.06529059, 0.08322392, ..., 0.00644431, 0.05657647,

0.04877098],

...,

[0.07590157, 0.05825804, 0.05098039, ..., 0.01991333, 0.05295255,

0.03334471],

[0.04030196, 0.04062235, 0.06502275, ..., 0.03167804, 0.04149333,

0.04484941],

[0.06578078, 0.04454392, 0.06813373, ..., 0.05520745, 0.06224 ,

0.0597251 ]], shape=(872, 1000))

You will notice that scikit-image takes a three-dimensional data structure, the third dimension being the color channels, and converts it to a two-dimensional, grayscale structure as expected. One detail that may strike you as different is that the values are decimals. Up to this point, grayscale images were represented as two-dimensional arrays of integers from 0 \(\rightarrow\) 255. There is no rule that says lightness and darkness values need to be represented as integers. Above, they are presented as floats from 0 \(\rightarrow\) 1. This brings us to the next topic of encoding values.
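To see where the decimal values come from, the conversion can be sketched in NumPy as a weighted sum of the three channels. The weights below are the ones given in the rgb2gray() documentation, while the tiny array `rgb` is a made-up example; this is an illustration of the idea rather than the library's actual source code.

```python
import numpy as np

# Luminance weights given in the rgb2gray() documentation (R, G, B)
weights = np.array([0.2125, 0.7154, 0.0721])

# Hypothetical 1 x 3 RGB image: pure red, pure green, pure blue
rgb = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]]], dtype=np.uint8)

# Scale to floats from 0 -> 1, then take the weighted sum over the channels
gray = (rgb / 255) @ weights

print(gray.shape)   # the channel dimension is gone
print(gray)
```

Because the weights sum to one, a white pixel maps to 1.0 and a black pixel to 0.0, and green contributes the most to the perceived brightness.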

7.2.2 Encoding

Encoding is how the values are presented in the image array. The two most common are integers from 0 \(\rightarrow\) 255 or floats from 0 \(\rightarrow\) 1. However, there are other ranges outlined in Table 3. The difference between signed integers (int) and unsigned integers (uint) is that unsigned integers are only non-negative integers starting with zero, while signed integers are both positive and negative, centered approximately around zero. The approximate part is because the range is split evenly between negative and non-negative values, and since zero takes up one of the non-negative slots, zero is not the exact center. To determine what the range of values is for an image, scikit-image provides the function skimage.dtype_limits().

Scikit-image also provides some convenient functions for converting to various value ranges described in Table 3. These functions are not contained in a module, so you will need to just do an import skimage to get access, which was done at the start of this chapter. The one format that probably needs commenting on is the Boolean format. In this encoding, every pixel is a True or False value, which is equivalent to saying 1 or 0. This is for black-and-white images where each pixel is one of two possible values.

Table 3 Scikit-Image Functions for Converting Data Types

| Functions | Description |
|---|---|
| | Converts to integers from 0 \(\rightarrow\) 255 |
| | Converts to integers from 0 \(\rightarrow\) 65535 |
| | Converts to integers from -32768 \(\rightarrow\) 32767 |
| | Converts to Boolean (i.e., |
| | Converts to floats from 0 \(\rightarrow\) 1 with 32-bit precision |
| | Converts to floats from 0 \(\rightarrow\) 1 with 64-bit precision |

# clip negatives because the image spans 0 -> 1 but the float dtype allows -1 -> 1

skimage.dtype_limits(hubble_gray, clip_negative=True)

(0, 1)

hubble_gray_uint8 = skimage.img_as_ubyte(hubble_gray)

skimage.dtype_limits(hubble_gray_uint8)

(0, 255)

If a grayscale image is encoded with floats from 0 \(\rightarrow\) 1, then it is necessary to set vmin=0 and vmax=1 when displaying it. These are the min and max possible values for the encoding and are used to ensure that the range of possible values extends completely across the colormap. If these two parameters are excluded, matplotlib will automatically adjust how the values map to colors so that the values actually present in the image use the full range of the colormap.
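As a quick check on how the conversion functions behave, the sketch below converts a small made-up float array (`img_float` is hypothetical) to 8-bit integers and back again. It uses only functions already mentioned in this section plus skimage.img_as_float().

```python
import numpy as np
import skimage

# Hypothetical float image spanning the full 0 -> 1 range
img_float = np.array([[0.0, 0.25], [0.75, 1.0]])

img_uint8 = skimage.img_as_ubyte(img_float)    # integers 0 -> 255
img_back = skimage.img_as_float(img_uint8)     # floats 0 -> 1 again

print(img_uint8.dtype, img_uint8.min(), img_uint8.max())
print(skimage.dtype_limits(img_uint8))

# Largest difference after the round trip, caused by rounding to 8 bits
round_trip_error = np.abs(img_back - img_float).max()
print(round_trip_error)
```

The round trip is not exact because an 8-bit image can only hold 256 distinct levels, so it is usually best to convert to integers only at the end of an analysis.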

7.2.3 Image Contrast

Before trying to extract certain types of information or identify features in an image, it is sometimes helpful to first increase the contrast of an image. There are a number of ways of doing this, including thresholding and modification of the image histogram. Some approaches can be performed using NumPy array manipulation, but scikit-image also provides convenient functions designed for these tasks.

Thresholding can be used to generate a black-and-white image (i.e., not grayscale) by converting gray values at or below a brightness threshold to black and above the threshold to white. The threshold can be set manually or by an algorithm that chooses an optimal value customized to each image. We will start with manually setting a threshold. The grayscale image generated from rgb2gray() is encoded with floats from 0 \(\rightarrow\) 1, so a threshold of 0.65 is chosen by experimentation. A black-and-white image is then generated as a Boolean. The resulting black-and-white image is shown below.

chem = data.immunohistochemistry()

chem_gray = color.rgb2gray(chem)

plt.imshow(chem_gray, cmap='gray', vmin=0, vmax=1);

chem_bw = skimage.img_as_ubyte(chem_gray > 0.65)

# the comparison generates a Boolean array, which img_as_ubyte() converts to 0 or 255

plt.imshow(chem_bw, cmap='gray', vmin=0, vmax=1);

The appropriate threshold may vary from image to image, so manually setting a value is not always practical. Scikit-image provides a number of functions, shown below in Table 4, from the filters module for automatically choosing a threshold. If you are not sure which of the functions below to use, there is a try_all_threshold() function in the filters module that will try seven of them and plot the results for easy comparison.

Table 4 Threshold Functions from the filters Module

| Functions | Description |
|---|---|
| | Threshold value from ISODATA method |
| | Threshold value from Li’s minimum cross entropy method |
| | Threshold mask (array) from local neighborhoods |
| | Threshold value from mean grayscale value |
| | Threshold value from minimum method |
| | Threshold mask (array) from the Niblack method |
| | Threshold value from Otsu’s method |
| | Threshold mask (array) from Sauvola method |
| | Threshold value from triangle method |
| | Threshold value from Yen method |

Note

Threshold value functions provide a single threshold value while threshold masks provide arrays of values the size of the image. They are used in the same fashion except that the latter provides a per-pixel threshold.

Below, we can see the Otsu filter being demonstrated.

from skimage import filters

threshold = filters.threshold_otsu(chem_gray)

chem_otsu = skimage.img_as_ubyte(chem_gray > threshold)

plt.imshow(chem_otsu, cmap='gray', vmin=0, vmax=1);
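To get a feel for what an automatic threshold does without needing a photograph, the following sketch applies threshold_otsu() to a synthetic image with two brightness populations. The image `img`, its noise level, and the random seed are all made up for illustration.

```python
import numpy as np
from skimage import filters

rng = np.random.default_rng(seed=0)

# Hypothetical bimodal image: dark left half, bright right half, plus noise
img = np.empty((50, 100))
img[:, :50] = rng.normal(0.2, 0.03, size=(50, 50))
img[:, 50:] = rng.normal(0.8, 0.03, size=(50, 50))
img = np.clip(img, 0, 1)

threshold = filters.threshold_otsu(img)   # a single value for the whole image
mask = img > threshold                    # Boolean black-and-white image

print(threshold)
print(mask.mean())   # fraction of pixels classified as bright
```

The chosen threshold falls between the two populations, so roughly half of the pixels end up on each side of it.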

Another method for increasing contrast is by modifying the image histogram. If the values from an image are plotted in a histogram, you will see something that looks like the following.

from skimage import exposure

hist = exposure.histogram(chem_gray)

plt.plot(hist[0])

plt.xlabel('Values')

plt.ylabel('Counts');

This is a plot of how many pixels of each brightness value are present in the image, with the values sorted into 256 bins. There are practically no pixels in the image that are black (bin 0) or completely white (bin 255), but there are two main collections of gray values. The contrast of this image can be increased by performing histogram equalization, which spreads these values out more evenly. The exposure module provides an equalize_hist() function for this task.

chem_eq = exposure.equalize_hist(chem_gray)

plt.imshow(chem_eq, cmap='gray', vmin=0, vmax=1);

Histogram equalization does not produce a black-and-white image, but it does make the dark values darker and the light values lighter. If we look at the histogram for this image, it will be more even as shown below.

hist = exposure.histogram(chem_eq)

plt.plot(hist[0])

plt.xlabel('Values')

plt.ylabel('Counts');
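The idea behind histogram equalization can be sketched in a few lines of NumPy: each pixel value is replaced by the fraction of pixels that are at or below it, which is the cumulative distribution function (CDF) of the histogram. The low-contrast image `img` below is synthetic, and this is an illustration of the principle under those assumptions, not the exact implementation used by equalize_hist().

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical low-contrast grayscale image (floats clustered around 0.5)
img = np.clip(rng.normal(0.5, 0.05, size=(64, 64)), 0, 1)

# Histogram and normalized cumulative distribution function (CDF)
counts, edges = np.histogram(img, bins=256, range=(0, 1))
centers = (edges[:-1] + edges[1:]) / 2
cdf = counts.cumsum() / counts.sum()

# Map each pixel to its CDF value, spreading the values across 0 -> 1
img_eq = np.interp(img.ravel(), centers, cdf).reshape(img.shape)

print(img.std(), img_eq.std())   # spread of values before and after
```

The larger standard deviation after the mapping reflects the values being spread more evenly across the available range.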

7.3 Scikit-Image Examples

The scikit-image library contains numerous functions for performing various scientific analyses - so many that they cannot be comprehensively covered here. Below is a selection of some interesting examples that are relevant to science, including counting objects in images, entropy analysis, and measuring eccentricity of objects. The examples below use mostly synthetic data to represent various data you might encounter in the lab. Real data can be easily extracted from publications but are not used here for copyright reasons.

7.3.1 Blob Detection

A classic problem that translates across many scientific fields is to count spots in a photograph. A biologist may need to quantify the number of bacteria colonies in a petri dish over the course of an experiment, while an astronomer may want to count the number of stars in a large cluster. In chemistry, this problem may occur as a need to quantify the number of nanoparticles in a photograph or to use the locations to calculate the average distances between the particles.

The good news is that the scikit-image library provides three functions that take an image and return an array with one row per blob, giving the coordinates of where each blob is located along with the size (sigma) of the kernel that detected it. If all you care about is the number of blobs, simply find the length of the returned array. The three functions, listed below, are Laplacian of Gaussian (LoG), Difference of Gaussian (DoG), and Determinant of Hessian (DoH). The LoG algorithm is the most accurate but the slowest, while the DoH algorithm is the fastest. These functions only accept two-dimensional images, so if it is a color image, you will need to either convert it to grayscale or select a single color channel to work with.

skimage.feature.blob_log(image, threshold=)

skimage.feature.blob_dog(image, threshold=)

skimage.feature.blob_doh(image, threshold=)

dots = io.imread('data/dots.png')

plt.imshow(dots);

An image of black dots on a white background is imported above, but the blob detection algorithms work best with light colors on a dark background. We will invert the image below either by subtracting the values from the maximum value or by using the skimage.util.invert() function, with color.rgb2gray() converting the color image to grayscale.

dots_inverted = color.rgb2gray(255 - dots)

or

dots_inverted = skimage.util.invert(dots)

dots_inverted = color.rgb2gray(255 - dots)

plt.imshow(dots_inverted, cmap='gray', vmin=0, vmax=1);
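The maximum value to subtract from depends on the encoding. As a small sketch with a made-up array (`img_uint8` and `img_float` are hypothetical), inversion by subtraction looks like this for the two most common encodings.

```python
import numpy as np

# Hypothetical grayscale image in two common encodings
img_uint8 = np.array([[0, 64], [192, 255]], dtype=np.uint8)
img_float = img_uint8 / 255

# Subtract from the maximum possible value for each encoding
inv_uint8 = 255 - img_uint8      # stays uint8: 0 <-> 255
inv_float = 1.0 - img_float      # floats: 0.0 <-> 1.0

print(inv_uint8)
print(inv_float)
```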

To detect the blobs, we will use the blob_dog() function as demonstrated below. The function allows for a threshold argument to be set to adjust the sensitivity of the algorithm in finding blobs. A lower threshold results in smaller or less intense blobs being included in the returned array.

from skimage import feature

blobs = feature.blob_dog(dots_inverted, threshold=0.5)

blobs

array([[1096. , 847. , 26.8435456],

[ 565. , 453. , 26.8435456],

[ 892. , 1097. , 26.8435456],

[ 980. , 283. , 26.8435456],

[1021. , 1534. , 16.777216 ],

[ 596. , 949. , 16.777216 ],

[ 531. , 1632. , 16.777216 ],

[ 120. , 877. , 16.777216 ],

[ 258. , 1308. , 16.777216 ],

[ 383. , 888. , 16.777216 ],

[ 391. , 1346. , 26.8435456],

[ 251. , 219. , 26.8435456]])

The returned array includes three columns corresponding to the y position, x position, and sigma of each spot, respectively. Sigma is the standard deviation of the Gaussian kernel that detected the blob, so it reflects the size of the spot rather than its intensity. The x and y coordinates for an image start at the top left corner while typical plots start at the bottom left. Keep this in mind when comparing the coordinates to the image. To confirm that scikit-image found all the blobs, we can plot the coordinates on top of the image to see that they all line up. This is demonstrated below.

plt.imshow(dots_inverted, cmap='gray', vmin=0, vmax=1)

plt.plot(blobs[:,1], blobs[:,0], 'rx');

To find the number of spots, determine the length of the array using the len() Python function or looking at the shape of the array.

len(blobs)

12
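Because the dots image is not bundled with scikit-image, it can be handy to test blob detection on a synthetic image where the answer is known. The sketch below draws three bright disks with NumPy and counts them with blob_log() instead of blob_dog(); the disk positions, sizes, and the sigma and threshold settings are assumptions chosen for this made-up image and would likely need tuning for real data.

```python
import numpy as np
from skimage import feature

# Hypothetical test image: three bright disks (radius 5) on a dark background
centers = [(20, 20), (20, 70), (70, 45)]   # (row, column)
rows, cols = np.mgrid[0:100, 0:100]
img = np.zeros((100, 100))
for r0, c0 in centers:
    img[(rows - r0)**2 + (cols - c0)**2 <= 5**2] = 1.0

# Each returned row is (row, column, sigma)
blobs = feature.blob_log(img, min_sigma=1, max_sigma=10, num_sigma=10,
                         threshold=0.5)

# For 2D LoG detection, the blob radius is approximately sqrt(2) * sigma
radii = np.sqrt(2) * blobs[:, 2]

print(len(blobs))
print(blobs)
print(radii)
```

Comparing the returned rows and columns with the known centers is a quick way to confirm that the settings are reasonable before moving on to experimental images.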

7.3.2 Entropy Analysis

The term entropy outside of the physical sciences is used to represent a quantification of disorder or irregularity. In image analysis, this disorder is the amount of pixel (brightness or color) variation within a region of the image. As you will see below, entropy is the highest near the boundaries and in noisy areas of a photograph. This makes an entropy analysis useful for edge detection, checking for image quality, and detecting alterations to an image.

The filters.rank module contains the entropy() function shown below. It works by going through the image pixel-by-pixel and calculating the entropy in the neighborhood, which is the area around each pixel. An entropy value is recorded in the new array at each location and can be plotted to generate an entropy map. The entropy function takes two required arguments: the image (img) and a description of the neighborhood called a structuring element (selem). Recent scikit-image releases name this second parameter footprint, so it is safest to pass it by position as done here.

filters.rank.entropy(img, selem)

from skimage.morphology import disk

from skimage.filters.rank import entropy

selem = disk(5)

selem

array([[0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0],

[0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0],

[0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]], dtype=uint8)

The neighborhood is defined as an array of ones and zeros. In this case, it is a disk of radius 5. The user can adjust this value to the needs of the analysis.

chem_gray_int = skimage.util.img_as_ubyte(chem_gray) #convert img to int

S = entropy(chem_gray_int, selem)

plt.imshow(S)

plt.colorbar();

Examination of the image shows that there is an increase in entropy near the edges of the features in the image as expected. There are two regions (blue) that contain unusually low entropy. If you look back at the original image, these regions are comparatively homogeneous in color.
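The behavior described above can be checked on a synthetic image where the answer is known in advance. In this sketch, the left half of a made-up image is perfectly uniform and the right half is random noise; the image size, noise, and disk radius are arbitrary choices for illustration.

```python
import numpy as np
from skimage.filters.rank import entropy
from skimage.morphology import disk

rng = np.random.default_rng(seed=0)

# Hypothetical 64 x 64 uint8 image: uniform left half, random noise on the right
img = np.full((64, 64), 100, dtype=np.uint8)
img[:, 32:] = rng.integers(0, 256, size=(64, 32), dtype=np.uint8)

# Local entropy (in bits) within a disk-shaped neighborhood of radius 3
S = entropy(img, disk(3))

print(S[:, :25].max())    # uniform region
print(S[:, 40:].mean())   # noisy region
```

The uniform region has zero entropy because every neighborhood contains a single value, while the noisy region has high entropy because nearly every pixel in a neighborhood is different.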

7.3.3 Eccentricity

Eccentricity is the measurement of how non-circular an object is. It runs from 0 \(\rightarrow\) 1 with zero being a perfect circle and larger values representing more eccentric objects. This can be useful for quantifying the shape of nanoparticles or droplets of liquid. The measure module from scikit-image provides an easy method of measuring eccentricity. First, let us import an image of ovals as an example. Alternatively, you are welcome to use the coins image from the data module, but this will require some preprocessing such as increasing the contrast.

ovals = io.imread('data/ovals.png')

plt.imshow(ovals);

The main function for measuring eccentricity is the regionprops() function, but this function by itself cannot find the objects. Luckily, there is another function in the measure module called label() that will do exactly this, and this function requires the regions to be light with dark backgrounds. The following inverts the light and dark and also removes the alpha channel from the RGBA image.

ovals_invert = color.rgb2gray(255 - ovals[:,:,:-1])

plt.imshow(ovals_invert, cmap='gray', vmin=0, vmax=1);

The regionprops() function returns the properties of the two ovals as a list of region objects. The first item corresponds to the first object and so on. Each item provides an extensive collection of properties as attributes, so it is worth visiting the scikit-image website to see the complete documentation. We are only concerned with eccentricity right now, so we can access the eccentricity of the first object with props[0].eccentricity, which gives a value of about 0.95 for the first object while the second object has a much lower value of about 0.40. This makes sense since the first object is very eccentric while the second object is much more circular.

from skimage.measure import label, regionprops

lbl = label(ovals_invert)

props = regionprops(lbl)

props[0].eccentricity

0.9469273936534165

props[1].eccentricity

0.39666071911272044
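As a sanity check on these numbers, the eccentricity of an ideal ellipse with semi-axes \(a \geq b\) is \(e = \sqrt{1 - (b/a)^2}\). The sketch below builds a synthetic binary image containing one elongated ellipse and one circle (both made up with NumPy, not taken from the ovals image) and compares the measured values with this formula.

```python
import numpy as np
from skimage.measure import label, regionprops

# Hypothetical binary image: an elongated ellipse and a circle (True = object)
rows, cols = np.mgrid[0:100, 0:200]
ellipse = ((rows - 50) / 10)**2 + ((cols - 50) / 40)**2 <= 1   # semi-axes 10, 40
circle = (rows - 50)**2 + (cols - 150)**2 <= 20**2             # radius 20
mask = ellipse | circle

# Label connected regions, then measure each labeled region
props = regionprops(label(mask))

for region in props:
    print(region.label, region.area, region.eccentricity)

# Eccentricity of an ideal ellipse with semi-axes a = 40 and b = 10
expected = np.sqrt(1 - (10 / 40)**2)
print(expected)
```

The circle should give a value near zero and the ellipse a value close to the formula, with small deviations expected because the shapes are built from square pixels.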

Further Reading

The scikit-image library together with NumPy is likely all you will need for the vast majority of your scientific image processing, and the scikit-image project webpage is an excellent source of information and examples. The gallery page is particularly worth checking out as it provides a large number of examples highlighting the library’s capabilities. In the event there is an edge case that scikit-image cannot handle, the pillow library may be of some use. Pillow provides more fundamental image processing functionality such as extracting metadata from the original file.

Scikit-image Website. http://scikit-image.org/ (free resource)

Pillow Documentation Page. https://pillow.readthedocs.io/en/stable/ (free resource)

Tanimoto, S. L. An Interdisciplinary Introduction to Image Processing: Pixels, Numbers, and Programs; MIT Press: Cambridge, MA, 2012.

Exercises

Complete the following exercises in a Jupyter notebook. Any data file(s) referred to in the problems can be found in the data folder in the same directory as this chapter’s Jupyter notebook. Alternatively, you can download a zip file of the data for this chapter from here by selecting the appropriate chapter file and then clicking the Download button.

Import the image titled NaK_THF.jpg using scikit-image.

a) Convert the image to grayscale using a scikit-image function.

b) Save the grayscale image using the io module.

Load the chelsea image from the scikit-image data module and convert it to grayscale. Display the image using the scikit-image plotting function and display it a second time using a matplotlib plotting function. Why do they look different?

Generate a 100 \(\times\) 100 pixel image containing random noise generated by a method from the np.random module such as random() or integers() (see section 4.7). Display the image in a Jupyter notebook along with a histogram of the pixel values. Hint: you will need to flatten the array before generating the histogram plot.

Write your own Python function for converting a color image to grayscale. Then find the source code for the scikit-image rgb2gray() function available on the scikit-image website and compare it to your own function. Are there any major differences between your function and the scikit-image function?

Import an image of your choice either from the data module or of your own and convert it to a grayscale image.

a) Invert the grayscale image using NumPy by subtracting all values from the maximum possible value.

b) Invert the original grayscale image using the invert() function in the scikit-image util module.

Import a color image of your choice either from the data module or of your own and calculate the sum of all pixels from each of the three color channels (RGB). Which color (red, green, or blue) is most prevalent in your image?

The folder titled glow_stick contains a series of images taken of a glow stick over the course of approximately thirteen hours along with a CSV file containing the times at which each image was taken in numerical order. Quantify the brightness of each image and generate a plot of brightness versus time.

The JPG image file format commonly used for photographs degrades images during the saving process due to the lossy compression algorithm while the PNG image file format does not degrade images with its lossless compression algorithm.

a) To view how JPG distorts images, import the nmr.png and nmr.jpg images of the same NMR spectrum. Subtract the two images from each other and visualize this difference to see the image distortions caused by JPG compression.

b) Which of the above file formats is better for image-based data in terms of data integrity?

Import the image spots.png and determine the number of spots in the image using scikit-image. Plot the coordinates of the spots you find with red x’s over the image to confirm your results. If your script missed any spots, speculate as to why those spots were missed.

The image test_tube_altered.png has been altered using photo editing software. Generate and plot an entropy map of the image to identify the altered regions.

Steganography is the practice of hiding information in an image or digital file to avoid detection. The file hidden_img.png was created by combining an image with pseudorandom noise to mask the original image. Perform an entropy analysis on the image to reveal the original image. You may need to adjust the size of the structuring element (selem) to detect the hidden image.